Alpha Pilot

|

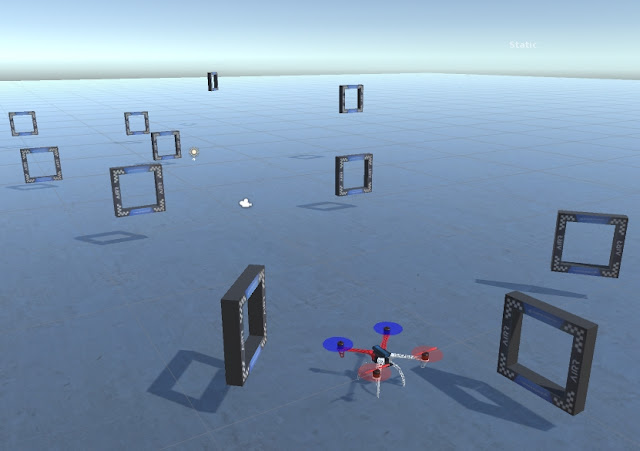

| The F450 quadcopter model flying around some AIRR gates in our simulator. |

For the last few months we've been having a go at the Lockheed Martin and MIT Alpha Pilot competition. The idea is to create software that flies a drone autonomously around a Drone Racing League (DRL) course in their new Artificial Intelligence Robot Racing League (AIRR). The top teams from the competition are given drone development kits and the chance to fly for real. The competition consisted of three tests: test 1 was a questionnaire, test 2 was a vision test and test 3 was a navigation and control test around a set of simulated gates.

AIRR DRL gate from the test 2 "vision test".

The image above shows the design of one of the AIRR gates that the drones have to fly through. Test 2 was an object detection and localisation test: the algorithm had to pick out the four inner corners of the gate, which bound the flyable region. Basically, we were given 10,000 images of a gate in a warehouse, taken from different angles and under varying lighting conditions. The images came with ground truth data, so we were able to train a convolutional neural network to find the four corners.
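We haven't said how the network's output was encoded, so what follows is only an illustration. One common way to set up corner regression, assumed here, is to put the four labelled corners into a fixed order (clockwise from top-left) and normalise them by the image size, so the CNN predicts eight numbers per gate. The helper names `order_corners`, `encode_target` and `decode_prediction` are hypothetical, and the network itself is left out.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def order_corners(corners: Sequence[Point]) -> List[Point]:
    """Order four gate corners clockwise on screen (image y-axis points down),
    starting from the top-left corner, so every label has the same layout.
    Hypothetical helper for illustration only."""
    cx = sum(x for x, _ in corners) / len(corners)
    cy = sum(y for _, y in corners) / len(corners)
    # With y pointing down, an increasing atan2 angle sweeps clockwise on screen.
    by_angle = sorted(corners, key=lambda p: math.atan2(p[1] - cy, p[0] - cx))
    # Rotate the list so the corner with the smallest x + y (top-left) comes first.
    # Note: this choice is ambiguous for gates rotated by about 45 degrees.
    start = min(range(len(by_angle)), key=lambda i: by_angle[i][0] + by_angle[i][1])
    return by_angle[start:] + by_angle[:start]


def encode_target(corners: Sequence[Point], width: float, height: float) -> List[float]:
    """Flatten the ordered corners into 8 values normalised by image size
    (the assumed regression target for the CNN)."""
    return [v for x, y in order_corners(corners) for v in (x / width, y / height)]


def decode_prediction(values: Sequence[float], width: float, height: float) -> List[Point]:
    """Turn 8 predicted values back into pixel coordinates."""
    return [(values[i] * width, values[i + 1] * height) for i in range(0, 8, 2)]
```

Fixing the corner order matters because a loss such as mean squared error compares the outputs slot by slot; if the label order varied from image to image, the network would be penalised for finding the right corners but putting them in the "wrong" slots.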

Now, the really great part of the competition was that there was a leader board to which teams could post their results files. "Ready Player One", anybody?

This is the Test 2 Leader Board. I'm not saying where we are on there, but we're not last.

To get on the leader board, you ran your algorithm against a set of 1,000 "unseen" images, generated a results file and received a score based on the mean average precision (mAP) of your predictions against their ground truth, with predictions matched using the intersection over union (IoU) metric. This showed up the first problem with the test: their submission code was just plain wrong. It took me quite a few attempts to figure out that you had to submit exactly two gate predictions for each image; otherwise you just got an "incorrect format" email at the next day's scoring. Being 12 hours out of the main time zone made it even harder. We had to submit our files by 8am (their 5pm), then wait until 5pm for the new leader board scores. By that point our day's work was almost over so, despite being 12 hours ahead, we were always a day behind.
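For anyone unfamiliar with the metric, here is a minimal sketch of IoU for four-corner gate polygons, plus a single-threshold average precision built on top of it. It assumes the gates are convex quadrilaterals (Sutherland-Hodgman clipping only works against a convex clip polygon). The function names, the 0.5 threshold and the greedy matching are illustrative choices, not the organisers' scorer, which may have differed (for example by averaging over several IoU thresholds).

```python
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]
Quad = Sequence[Point]


def _side(a: Point, b: Point, p: Point) -> float:
    """Cross product of (b - a) and (p - a): positive when p lies left of a->b."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def _signed_area(poly: Sequence[Point]) -> float:
    """Shoelace formula; positive for counter-clockwise vertex order."""
    n = len(poly)
    return sum(poly[i][0] * poly[(i + 1) % n][1] - poly[(i + 1) % n][0] * poly[i][1]
               for i in range(n)) / 2.0


def _lerp(p: Point, q: Point, t: float) -> Point:
    return (p[0] + t * (q[0] - p[0]), p[1] + t * (q[1] - p[1]))


def clip_convex(subject: Quad, clip: Quad) -> List[Point]:
    """Sutherland-Hodgman: clip `subject` against the convex polygon `clip`."""
    if _signed_area(clip) < 0:
        clip = list(reversed(clip))  # make clip CCW so "inside" means "left of every edge"
    output = list(subject)
    for i in range(len(clip)):
        if not output:
            break  # nothing left: the polygons do not overlap
        a, b = clip[i], clip[(i + 1) % len(clip)]
        current, output = output, []
        for j in range(len(current)):
            prev, cur = current[j - 1], current[j]
            d_prev, d_cur = _side(a, b, prev), _side(a, b, cur)
            if d_cur >= 0:
                if d_prev < 0:  # edge enters the clip region: add the crossing point
                    output.append(_lerp(prev, cur, d_prev / (d_prev - d_cur)))
                output.append(cur)
            elif d_prev >= 0:  # edge leaves the clip region: add only the crossing point
                output.append(_lerp(prev, cur, d_prev / (d_prev - d_cur)))
    return output


def quad_iou(p: Quad, q: Quad) -> float:
    """Intersection over union of two convex quadrilaterals."""
    inter_poly = clip_convex(p, q)
    inter = abs(_signed_area(inter_poly)) if len(inter_poly) >= 3 else 0.0
    union = abs(_signed_area(p)) + abs(_signed_area(q)) - inter
    return inter / union if union > 0 else 0.0


def average_precision(preds: List[Tuple[str, Quad, float]],
                      gts: Dict[str, List[Quad]],
                      iou_threshold: float = 0.5) -> float:
    """Non-interpolated AP: preds are (image_id, quad, confidence).
    Each prediction, in descending confidence, is greedily matched to the
    best still-unmatched ground-truth gate in the same image."""
    total = sum(len(v) for v in gts.values())
    if total == 0:
        return 0.0
    matched = {k: [False] * len(v) for k, v in gts.items()}
    true_positives, ap = 0, 0.0
    for rank, (img, quad, _) in enumerate(sorted(preds, key=lambda p: -p[2]), start=1):
        best, best_j = 0.0, -1
        for j, gt in enumerate(gts.get(img, [])):
            if not matched[img][j]:
                iou = quad_iou(quad, gt)
                if iou > best:
                    best, best_j = iou, j
        if best_j >= 0 and best >= iou_threshold:
            matched[img][best_j] = True
            true_positives += 1
            ap += true_positives / rank  # precision at this recall step
    return ap / total
```

As a check: two 2×2 squares overlapping in a 1×1 corner have an intersection of 1 and a union of 4 + 4 - 1 = 7, so `quad_iou` gives 1/7, well below a 0.5 threshold.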

When the final code was submitted, there was also a score for algorithm speed, which is where we might have gone a bit wrong. We had built a very simple algorithm that sacrificed speed for accuracy, and saw our results pushed right down to near the bottom of the leader board. Oh well, it was fun while it lasted and we learnt a lot about object localisation.

Test 3, guidance, navigation and control, running in our own simulator.

Then there was test 3, which was a bit of a disaster for us. This was so disappointing, because writing an algorithm that makes a drone fly autonomously around a course is such a fun test. The test specified Ubuntu 16.04.5 and the Kinetic version of the Robot Operating System (ROS), along with an MIT-built flight simulator. To cut a long story short, we spent the best part of 5 weeks trying to get their simulator to work.

The problem was that it required a very high specification of computer (i9 Extreme, 32GB RAM, Titan Z graphics card) and then insisted on a legacy Ubuntu operating system and an old version of ROS. Despite having 15 high-end Alienware graphics workstations, we couldn't just reinstall one with an old version of Ubuntu, because they are needed for our day-to-day AR and VR work. We ended up building a machine from scratch by ripping the disks out and putting Ubuntu onto a fresh disk, but still couldn't get the flight simulator to run, hitting graphics card issues every time. The graphics requirement meant that virtualisation wouldn't work (no direct access to the graphics hardware). Amazon Web Services (AWS) could have been another option; it's just that we can't spend the money required for AWS on a competition of this nature, where the chance of success is very low.

We tried about 25 configurations of the hardware and software, but never got to the point where their simulator was sending back the gate position indicators we needed, principally because it kept crashing. Bear in mind that installing all the required software was a distinctly non-trivial task which took about half a day each time, and you can see that we wasted all our time on systems installation and spent virtually none on our own flight algorithm.

In frustration I gave up with 5 days to go and implemented something in our own QuadSim flight simulator, just so that I could submit a two-page algorithm description, even though I can't submit any code. You never know, it might be a sufficiently interesting algorithm for them to come back to us, but I seriously doubt that we'll make it through the qualification stage on the basis of our submission. From what I was reading on the test 3 forum, lots of other people had the same problems: the simulator didn't work and there were lots of questions about how the automatic submission and testing system would work.

Anyway, once I started actually developing something in our own simulator, it was fun again. That started me thinking about what to do with everything I've learnt from this exercise. It's been a catalyst for new ideas and made me wonder whether AIRR is actually missing the point. You don't need big, powerful, dangerous 80mph drones to have fun with AI. And that's all I'm going to say for now.
