HeroX.com Mars XR Competition

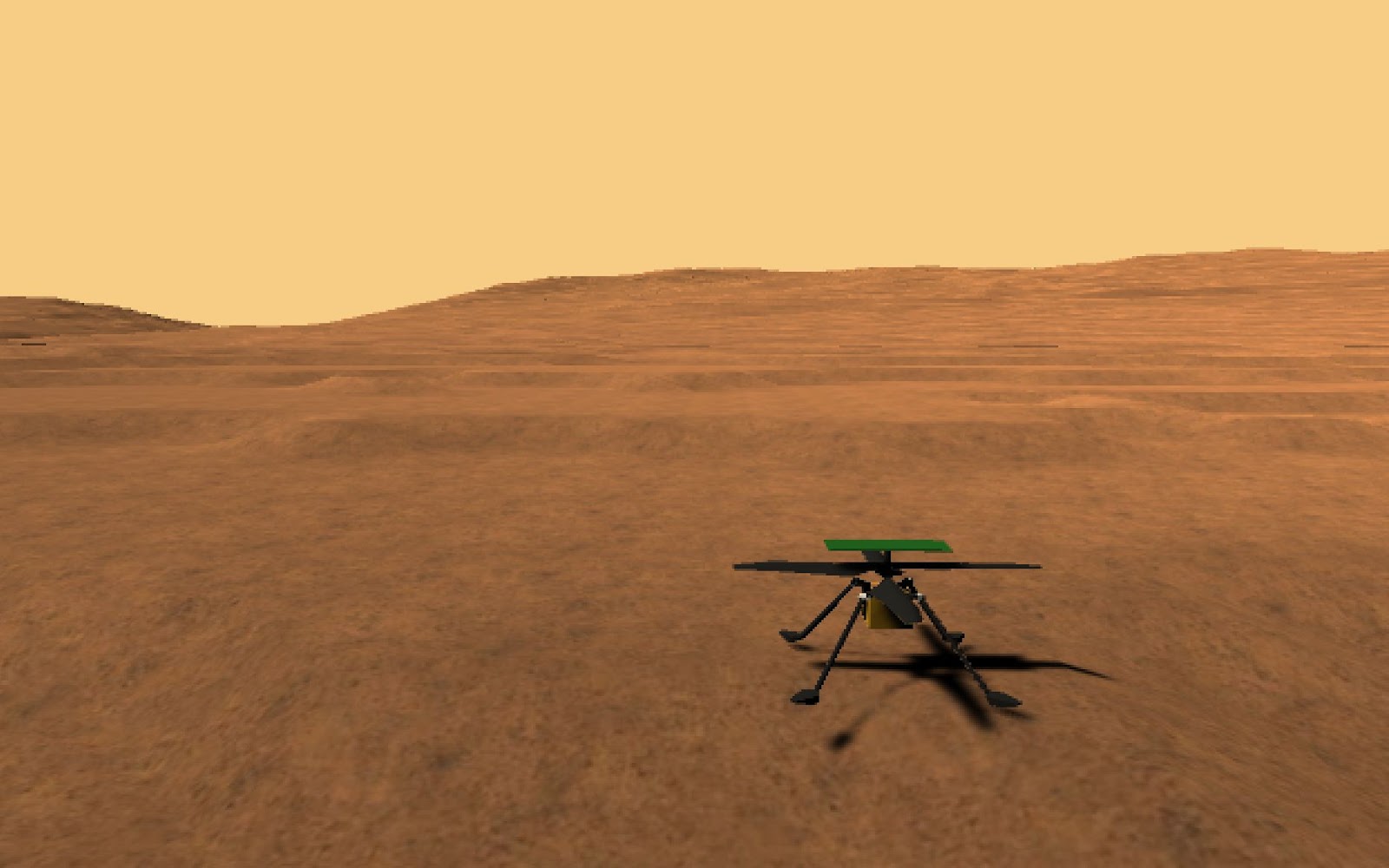

Over the last three months we've been working on NASA's XOSS Mission Control Mars XR competition on herox.com. You can see our attempts at making a drone model using Unreal Engine Blueprints in the video above.

Things didn't go entirely to plan, though. Firstly, it took about a month of trying to make a Valve Index VR headset work on a gaming laptop before we gave up, bought a big graphics card and a new power supply, and completely rebuilt a desktop computer. The VR headset then worked, but the software wouldn't install from the Epic Games site. By the time we finally had a working application to develop on, almost half of the time had already gone.

Playing with the simulation of Jezero Crater, using the VR goggles and hand controllers to control the astronaut, was utterly fantastic. Standing on the planet's surface watching the Sun go down behind a crater was spectacular in 3D.

The default scene puts you in front of a table containing some tools, with a Mars rover called a "Multi-Mission Surface Exploration Vehicle", or MMSEV, and a small robotic rover in the background.

So you work out how to pick up the hammer, and what's the first thing you do with it? See how far you can throw it in roughly a third of Earth's gravity, obviously!

And this is the point where we thought: "I wonder how high you could throw a hand-launch glider?"
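As a rough check on how much further things go on Mars, ignoring air resistance and assuming the same launch speed on both planets, the peak height of a vertical throw (and likewise the range of a hammer throw) scales inversely with gravity. Taking Mars' surface gravity as about 3.71 m/s²:

```latex
h_{\max} = \frac{v_0^2}{2g}, \qquad
R = \frac{v_0^2 \sin 2\theta}{g}, \qquad
\frac{h_{\text{Mars}}}{h_{\text{Earth}}} = \frac{R_{\text{Mars}}}{R_{\text{Earth}}}
  = \frac{g_{\text{Earth}}}{g_{\text{Mars}}}
  \approx \frac{9.81}{3.71} \approx 2.6
```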

After exploring the application a bit more, we found a wealth of assets with which to build our XR mission, including an "AdvancedDroneSystem" Blueprint, activated by pressing the "V" key, that lets you fly a drone around with the keyboard. It has a very cool feature: you can switch to a thermal or infra-red camera, which post-processes the image to make it look like the output of a real thermal or IR camera.

*Images: infra-red view; infra-red view at night; visible light view at night.*
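How the Mission Control post-process is actually implemented isn't described here, but one common way to fake a thermal camera is a false-colour map: reduce each pixel to a single intensity and look it up in a cold-to-hot palette. A minimal sketch of that idea, with luminance standing in for temperature and a hypothetical black-blue-red-yellow-white palette:

```python
"""False-colour 'thermal' palette sketch (hypothetical palette and names)."""

# Palette stops from cold to hot: (intensity, (r, g, b)), all in 0..1.
THERMAL_STOPS = [
    (0.00, (0.0, 0.0, 0.0)),  # black
    (0.25, (0.0, 0.0, 1.0)),  # blue
    (0.50, (1.0, 0.0, 0.0)),  # red
    (0.75, (1.0, 1.0, 0.0)),  # yellow
    (1.00, (1.0, 1.0, 1.0)),  # white
]


def luminance(r, g, b):
    """Rec. 709 luma weights, used here as a stand-in for temperature."""
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def thermal_colour(t):
    """Map an intensity t (clamped to 0..1) to RGB by linear interpolation."""
    t = min(max(t, 0.0), 1.0)
    for (t0, c0), (t1, c1) in zip(THERMAL_STOPS, THERMAL_STOPS[1:]):
        if t <= t1:
            f = (t - t0) / (t1 - t0)
            return tuple(a + f * (b - a) for a, b in zip(c0, c1))
    return THERMAL_STOPS[-1][1]


def thermal_pixel(r, g, b):
    """Recolour one visible-light pixel as a false-colour 'thermal' pixel."""
    return thermal_colour(luminance(r, g, b))
```

In a game engine the same lookup would run per pixel in a post-process shader, usually with the palette stored as a small gradient texture rather than a list.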

The brief for the competition was to make an XR mission fitting one of the following categories: Set Up Camp, Maintenance, Scientific Experiments, Exploration, or Blow Our Minds. The obvious approach is to build a game level around something the astronaut can do on an EVA, like descending a rope into a crater, setting up a scientific experiment on the surface or cleaning Martian dust off the solar panels. However, having flown the drone around and discovered the lava caves, we came up with something a bit different.

We're the "Centre for Advanced Spatial Analysis", so exploration, remote sensing and making 3D models from images are our areas of expertise. If we're going to explore Mars, then doing it from the air lets us cover more ground. The question is: how does an astronaut control a drone while wearing very restrictive EVA gloves?

*This is a good picture of me in the bulky suit trying to fly the drone. Sorry my hands aren't in the picture, but it's virtually impossible to get everything in the camera at the same time.*

We have some previous form when it comes to flying simulators and real drones using hand movements; the links section at the end contains examples of our previous work. It all stems from the UK Big Bang Science Fair in 2018, where we had the idea of using hand tracking to fly our Lima simulator instead of a regular joystick, because we expected little hands to be coming in from all directions wanting to fly our drone. There's one great picture where we're somewhere in the middle of a huddle of kids, so it turned out to be a very good idea. After that, we were surprised at how little research targets the human factors of UAV flight control. The DJI Motion Controller is the only recent device that really tries to innovate in flight control; other than that, drone controllers tend to be four-axis joysticks.

We now had the genesis of an idea, even if it probably wasn't quite what the architects of the Mission Control simulator had in mind when they came up with the brief for the competition. The fact is, if you want to do any serious exploration on Mars, then you need to fly. Also, there are areas that are going to be dangerous for humans to explore and areas that need surveying to ensure that they are safe. All that was left now was to port our drone control expertise over to Unreal Engine.

The first month was spent trying to get the Valve Index VR headset to work with our gaming laptop. In the end I had to buy a new graphics card and a new power supply for my desktop machine, and rebuild it, in order to run the virtual reality. Then we couldn't get the software moved over from the gaming laptop to the desktop machine. All it said was "it's already in your library". I know, but it's in my library on a different computer! Eventually we made it work, and there was still half of the original three months left. I thought we were in good shape, at least until I started trying to program in Unreal.

Firstly, the Valve Index could only be described as flaky at best: Windows kept telling me that the drivers had failed. Then there was Unreal and its Blueprints system. I'm a developer with over twenty years' experience who used to work in the games industry, but this was my first time using Unreal. I had read everything I could find, but when I opened it for the first time my impression was of how much more complicated it is than Unity 3D. They're very different things, and Unreal felt a lot more like the old games programming that I used to do.

This was where I hit the next brick wall. Whatever I did, I could not get any inputs through to the drone model that I had created. My first test was to see whether a button press could rotate my drone model about its yaw axis. Nothing. Absolutely nothing worked for weeks. Even one of the official Unreal videos on character control mentioned that there is a certain amount of "weirdness" in how Unreal handles input events.

To cut a long story short, the Unreal videos say that a non-player character (NPC) should be derived from a "Pawn". This is how the existing "AdvancedDroneController" scripts provided with the Mission Control program worked. What they don't tell you, though, is that pawns don't receive inputs (sometimes, maybe; remember the weirdness?). The player character has to "possess" the pawn in order to control it, but that isn't what I was trying to do. I wanted to stand there in my EVA suit and fly the drone from a 3rd person view using my hand controller, while being able to switch to 1st person with a button. What I needed was to derive my drone class from an "Actor", not a "Pawn". I eventually figured this out from an Unreal forum post about five days before the deadline.

What confused me is that you can get inputs to a pawn if you do it in a certain way. I could press a key and see the drone rotate, but when I tried it a different way in the Blueprint I got nothing, and it was just plain impossible to get it to react to the player's controller. When I tried making the controller's trigger button rotate the drone, or making it react to the character's hand pose, I got nothing. It needs to be an Actor.

At this point, I basically had the last weekend to do almost everything. On Saturday I went from no control at all to a drone that flew but was hard to fly, and over the course of Sunday I gradually honed the flight model. These are the Blueprints that I came up with:

You see the problem? It's a horrendous mess of spaghetti code. Give me C++ any day, but we were locked out of real code and had to use Blueprints.

This is the rotation code:

And this is the translation code:

Basically, it's a constant-velocity controller: you tilt your hand in the direction you want to travel, and the drone holds its height constant while regulating its speed in that direction. Moving your hand up and down controls the height: the further your hand is from the zero height, the faster the vertical velocity.
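The hand-to-velocity mapping described above can be sketched in a few lines of Python. This is not a transcription of the Blueprints: the `HandPose` fields, the tilt and speed limits, the dead zone and the climb gain are all hypothetical values chosen for illustration. The function returns velocity commands that would be set directly on the drone every frame, which is also why a model like this has no inertia.

```python
from dataclasses import dataclass


@dataclass
class HandPose:
    """Hypothetical hand reading, already expressed relative to a calibrated rest pose."""
    pitch_deg: float  # forward/back tilt of the hand (positive = tilt forward)
    roll_deg: float   # left/right tilt of the hand (positive = tilt right)
    height_m: float   # hand height above (+) or below (-) the "zero" height


MAX_TILT_DEG = 30.0  # hypothetical: tilt that maps to full horizontal speed
MAX_SPEED = 5.0      # hypothetical: horizontal speed limit, m/s
DEAD_ZONE_M = 0.05   # hypothetical: ignore small wobbles around the zero height
CLIMB_GAIN = 4.0     # hypothetical: vertical speed per metre of hand offset, 1/s
MAX_CLIMB = 3.0      # hypothetical: vertical speed limit, m/s


def clamp(x, lo, hi):
    return max(lo, min(hi, x))


def hand_to_velocity(hand: HandPose):
    """Return (forward, right, up) velocity commands in m/s."""
    # Tilt sets the speed in the tilt direction, saturating at MAX_SPEED.
    forward = clamp(hand.pitch_deg / MAX_TILT_DEG, -1.0, 1.0) * MAX_SPEED
    right = clamp(hand.roll_deg / MAX_TILT_DEG, -1.0, 1.0) * MAX_SPEED
    # Vertical speed grows with distance from the zero height; inside the
    # dead zone the command is zero, so the drone holds its height.
    if abs(hand.height_m) < DEAD_ZONE_M:
        up = 0.0
    else:
        up = clamp(CLIMB_GAIN * hand.height_m, -MAX_CLIMB, MAX_CLIMB)
    return forward, right, up
```

For example, `hand_to_velocity(HandPose(15.0, 0.0, 0.0))` gives half speed forward at constant height. The dead zone is our addition; without something like it, a gloved hand that can never be held perfectly still would make the drone creep up and down.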

There was just one more problem to overcome in Unreal before I could get to this point, though. All my testing had used the left hand. The method I was using to get the hand pose from the character let you specify the left or right hand, plus a lot of other joints. So I switched the drop-down from left hand to right hand and ran the simulation. It was still the left hand. You have got to be joking. The Blueprint element that I was using could only get the left-hand data, so I had to throw it away and find a different way of getting the joint information. If you look at the Blueprints, it's basically just a lot of breaking vectors up into components and recompositing them before setting the rotation vector and translation velocities.
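One common reason for breaking a vector into components and recompositing it is to turn a velocity expressed relative to the drone's heading into world coordinates. A minimal sketch, assuming (our assumption, not something stated above) that the hand-derived velocities are heading-relative, and using Unreal's axis convention of X forward, Y right, Z up, with yaw measured about Z:

```python
import math


def body_to_world(forward, right, up, yaw_deg):
    """Rotate a heading-relative velocity (forward, right, up) into world (x, y, z).

    Yaw 0 faces +X and positive yaw turns towards +Y, as in Unreal's
    Z-up frame; the vertical component is unaffected by yaw.
    """
    yaw = math.radians(yaw_deg)
    c, s = math.cos(yaw), math.sin(yaw)
    # Break the body-frame vector into its forward and right parts, then
    # recombine them along the world-frame forward (c, s) and right (-s, c) axes.
    x = forward * c - right * s
    y = forward * s + right * c
    return x, y, up
```

With the drone yawed 90 degrees, a pure "forward" command comes out along world +Y, which is the behaviour you want when the pilot thinks in terms of where the drone is facing.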

I couldn't use Unreal's built-in physics engine, so the drone simulation is terrible and has no inertia. When I switched the physics on I lost the ability to set the velocities directly, so I would have needed PID controllers to make it fly using force and acceleration. Time was against me, so I had to abandon that idea, but it's what we do in our own Lima simulator. Writing a drone simulation is actually very easy, and I'm wondering whether it's possible using Blueprints, so that's something I might try at a later date. I would like to revisit this and see if I can make a better job of it, but that was the best I could do in the time available. After all the work I just wanted to submit something, and flying a drone with your hand into the lava caves and along the tunnels might just be crazy enough to get a mention from the judges.
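For comparison, a flight model with inertia applies a force and lets velocity and position follow from the acceleration, with a PID controller closing the loop. The sketch below does this for height only. It is a generic textbook PID altitude hold, not the Lima simulator's code, and the mass, thrust limit and gains are hypothetical; gravity is set to roughly Mars' surface value.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

MARS_GRAVITY = 3.71  # m/s^2, approximate surface gravity on Mars


@dataclass
class PID:
    """Textbook PID controller (hypothetical gains, no anti-windup)."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, error: float, dt: float) -> float:
        self.integral += error * dt
        # Skip the derivative on the first call to avoid a start-up spike.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate_altitude_hold(target_m: float, mass_kg: float = 1.5,
                           max_thrust_n: float = 20.0, dt: float = 0.01,
                           steps: int = 3000) -> Tuple[float, float]:
    """Fly a point-mass drone up from the ground; return (height, vertical speed)."""
    pid = PID(kp=3.0, ki=0.3, kd=3.0)
    z, vz = 0.0, 0.0
    hover_thrust = mass_kg * MARS_GRAVITY  # feed-forward: thrust that cancels gravity
    for _ in range(steps):
        thrust = hover_thrust + pid.update(target_m - z, dt)
        thrust = min(max(thrust, 0.0), max_thrust_n)  # rotors can only push, up to a limit
        accel = thrust / mass_kg - MARS_GRAVITY      # F = m a
        vz += accel * dt                             # velocity follows acceleration...
        z += vz * dt                                 # ...and height follows velocity
    return z, vz
```

Adding the hover thrust m·g as a feed-forward term means the PID only has to correct errors rather than hold the drone up. Repeating the same structure for the horizontal axes would be one way to turn velocity commands like the hand-controller ones into forces, so the drone speeds up and slows down instead of changing velocity instantly.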

*Our own virtual Mars in Unity 3D*

Finally, we've been writing a drone book, and one of the chapters is on how to use remotely sensed data to create your own Mars simulation in Unity 3D. It was written long before the HeroX MarsXR competition, so I might go back and have another look at it with a view to making a more detailed landscape from higher-resolution data. I only used coarse-grained remotely sensed data in my original code to make it easier for people to do it themselves. It actually doesn't look too bad, but it's not in the same league as the Mars XR planet. Watch out for a blog post on this later.
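The core of turning a remotely sensed elevation grid into a game terrain is small: optionally coarsen the grid, then rescale the elevations into the 0..1 range that Unity's `TerrainData.SetHeights` expects, keeping the minimum and the range so the terrain's vertical size can be set to match. This is a minimal Python sketch under those assumptions, not the code from the book; the grid is assumed to be a list of rows of elevations in metres. Mars elevations are often negative relative to the datum, which the normalisation handles.

```python
def block_average(dem, factor):
    """Downsample a 2D elevation grid by averaging factor x factor blocks.

    Rows and columns that don't fill a complete block are dropped.
    """
    rows = len(dem) // factor
    cols = len(dem[0]) // factor
    out = []
    for i in range(rows):
        row = []
        for j in range(cols):
            block = [dem[i * factor + di][j * factor + dj]
                     for di in range(factor) for dj in range(factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


def normalise_heights(dem):
    """Rescale elevations into 0..1; return (heights, min_elevation_m, range_m).

    A perfectly flat grid gets a nominal 1 m range to avoid dividing by zero.
    """
    lo = min(min(r) for r in dem)
    hi = max(max(r) for r in dem)
    span = (hi - lo) or 1.0
    heights = [[(v - lo) / span for v in r] for r in dem]
    return heights, lo, span
```

Coarsening first is what keeps the terrain light enough for people to build themselves; skipping `block_average` (a factor of 1) gives the full-resolution version.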

So that was our little diversion into flying drones in the lava caves of Mars. Despite all the coding problems it was still a lot of fun. I just wish I'd had more time, but there's a chance they might run the competition again next year.

LINKS

- First use of the Leap Motion controller at the UK's Big Bang Science Fair [link]

- Long video of flying our Lima simulator with the hand controller [link]

- Flying a real drone with your hand, a Leap Motion and Unity 3D [link]

- Flying a regular flight simulator with your hand [link]
